
How to do QA testing for live streaming software?

Which aspects of live streaming software performance do users care about most? And what are the standards for video and audio quality?

Going forward, core competitiveness lies in two things. The first is improving every quality indicator of a live streaming platform while meeting users' requirements. The second is performing dedicated testing on fluency, clarity, sound quality, stability and data traffic (flow), which improves the quality of audio and video calls.

Basic principle of audio and video calls

First, let's start with the basic principles of audio and video calls.

The basic flow for audio and video is shown below. Audio and video are processed differently at each stage, but the overall flow is the same:

Collection → Pre-process → Coding → Network transmission → Decoding → Post-process → Play

| Stage | Video | Audio |
|---|---|---|
| Collection | Camera, screenshot, etc. | Microphone, hook |
| Pre-process | Web glow, clarity optimization, etc. | Noise suppression, noise cancellation, gain control |
| Coding / decoding | H.264, HEVC, VP8 | SILK, CELT, iSAC |
| Network transmission | Bandwidth prediction, stable bitrate, FEC, ARQ, etc. | (same as video) |
| Post-process | Deblocking (blocking artifact removal), etc. | Sound mixing |
| Play | Screen | Speaker, headset |

1. Collection

Sensors in hardware such as cameras and microphones collect the audio and video and convert them into digital signals. In two-person and multi-person video, the streams are collected and played through an ffmpeg plug-in.

2. Pre-process

After collection, the audio and video must be pre-processed. For audio, pre-processing includes:

- automatic gain control (AGC)
- automatic noise suppression (ANS)
- acoustic echo cancellation (AEC)
- voice activity detection (VAD)
- and so on

For video, pre-processing includes video noise reduction, scaling, etc.

3. Coding and decoding

Codecs encode and decode signals or data streams. Encoding converts a signal or data stream into a coded form, generally for transmission, storage or encryption. Decoding extracts the content back out of the encoded stream.

Common video codecs include VP8, VP9, MPEG and H.264. Audio codecs fall into two general types:

- speech codecs (SILK, Speex, iSAC, etc.)
- audio codecs (CELT, AAC, etc.)

4. Network transmission

Depending on the network environment, either UDP or TCP is used for transmission. In real-time audio and video calls, UDP is preferred because of its flexibility and lower latency.

This stage also has to cope with impairments during transmission. The main tools are packet size control, FEC (forward error correction), retransmission of lost packets, and handling of jitter, latency and out-of-order packets.
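To illustrate the FEC mechanism, here is a minimal sketch of the simplest scheme, XOR parity. One parity packet is sent for each group of media packets, so any single lost packet in the group can be rebuilt without retransmission.

This is a generic textbook example, not the scheme any particular platform uses. Production FEC (Reed-Solomon, flexible FEC, etc.) is more elaborate, and the equal-length packets here are a simplifying assumption.

```python
from __future__ import annotations

from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets: list[bytes]) -> bytes:
    """Build one XOR parity packet for a group of equal-length packets."""
    return reduce(xor_bytes, packets)


def recover(received: list[bytes | None], parity: bytes) -> list[bytes]:
    """Rebuild at most one lost packet (marked None) using the parity packet."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) > 1:
        raise ValueError("XOR parity can repair only one lost packet per group")
    repaired = list(received)
    if missing:
        # parity XOR all surviving packets leaves exactly the lost packet.
        survivors = [p for p in received if p is not None]
        repaired[missing[0]] = reduce(xor_bytes, survivors, parity)
    return repaired


group = [b"pkt1", b"pkt2", b"pkt3"]
parity = make_parity(group)
print(recover([b"pkt1", None, b"pkt3"], parity))  # [b'pkt1', b'pkt2', b'pkt3']
```

The trade-off is bandwidth: one extra packet per group buys protection against one loss in that group. This is why FEC is usually combined with ARQ rather than replacing it.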

5. Post-processing

Post-processing begins once the data has been transmitted over the network to the recipient and decoded. This stage may require resampling or mixing. It may also involve removing blocking artifacts and frequency shift in the time domain, etc.
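The sound mixing step can be sketched as two operations:

1. Sum the time-aligned 16-bit PCM samples from each participant.
2. Clamp the result to the int16 range to avoid wrap-around distortion.

This is a minimal sketch. It assumes the streams are already decoded, resampled to the same rate and aligned. Real mixers usually apply gain control or soft limiting instead of hard clipping.

```python
from __future__ import annotations

INT16_MIN, INT16_MAX = -32768, 32767


def mix_pcm16(*streams: list[int]) -> list[int]:
    """Mix several int16 PCM sample lists by summing and hard-clipping."""
    length = max(len(s) for s in streams)
    mixed = []
    for i in range(length):
        # Samples missing from shorter streams are treated as silence (0).
        total = sum(s[i] if i < len(s) else 0 for s in streams)
        mixed.append(max(INT16_MIN, min(INT16_MAX, total)))
    return mixed


print(mix_pcm16([1000, 30000, -30000], [500, 10000, -10000]))  # [1500, 32767, -32768]
```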

6. Play and rendering

After post-processing, play and rendering turn the digital signals back into sound and pictures. Commonly used audio playback APIs on Windows include DirectSound, WaveOut and Core Audio.

Video quality standard

If you are interested in video quality standards and testing methods, keep reading.

1. Channel entry speed

Normal network requirement: entering the live streaming channel takes less than 1 second (iOS and Android).

Weak network requirement: there is no standard requirement for channel entry speed on a weak network.

For Android, medium-end and low-end devices are recommended for testing. For iOS, the iPhone 6s is recommended.

Testing methods

Scenarios: cover every entry point to the live streaming channel. These include the app itself, Facebook, Instagram, LinkedIn, YouTube, Snapchat, Reddit, WeChat, WhatsApp, Skype, etc.

Start a millisecond stopwatch on one phone, then open the software under test on a second phone and enter the live channel;

Stop the stopwatch the moment the first frame appears, and record the time;

Repeat the steps above 20 times and take the average as the final result.
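The averaging step and the 1-second normal-network requirement can be checked with a small script. The readings below are made-up placeholders, not measured data. In practice you would enter the 20 stopwatch readings.

```python
from __future__ import annotations

from statistics import mean

ENTRY_LIMIT_MS = 1000  # normal-network requirement: enter the channel in under 1 s


def evaluate_entry_times(samples_ms: list[float], runs_required: int = 20) -> tuple[float, bool]:
    """Average the stopwatch readings and check the average against the limit."""
    if len(samples_ms) != runs_required:
        raise ValueError(f"expected {runs_required} runs, got {len(samples_ms)}")
    avg = mean(samples_ms)
    return avg, avg < ENTRY_LIMIT_MS


# Placeholder readings in ms, for illustration only.
readings = [820, 760, 910, 880, 790] * 4
avg, passed = evaluate_entry_times(readings)
print(f"average entry time: {avg:.0f} ms, pass: {passed}")  # average entry time: 832 ms, pass: True
```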

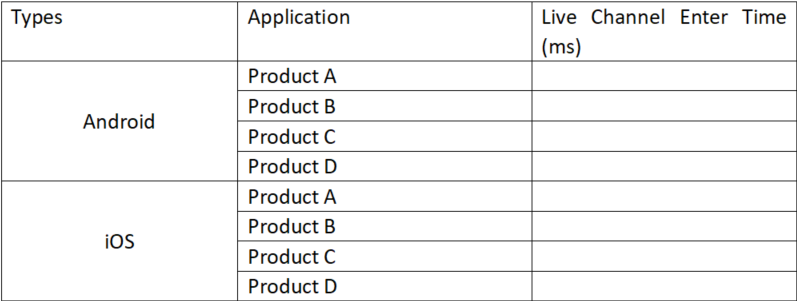

Competing products' data

2. Clarity

On a normal network

Clarity must not deteriorate compared with the previous version.

On a weak network

At a packet loss rate of 10%, clarity must not drop noticeably compared with the normal network.

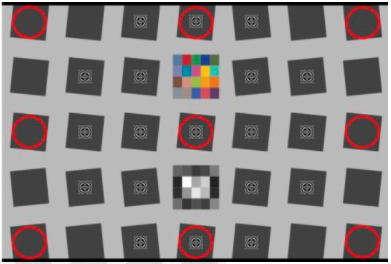

Tool: Imatest

Environment setup

Place the camera 0.75 m from the test chart, and set the light at 45° to the chart so that no shadow falls on the chart surface.

When testing under fluorescent light, warm up the lights for 15 minutes.

Measure the illuminance and color temperature at 9 points on the reflective chart to make sure the lighting is uniform. Then adjust the position of the phone under test so the chart is centered in the frame.

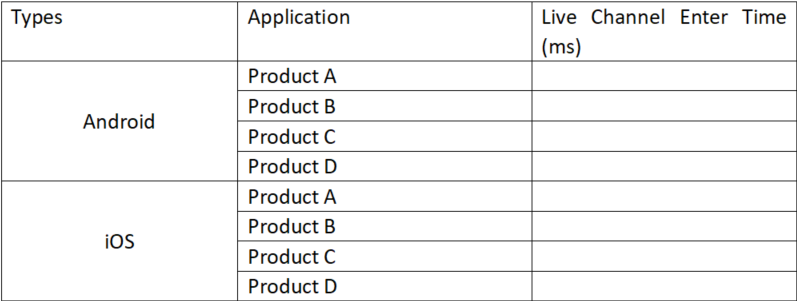

Competing products' data

3. Frame rate

On a normal network

Above roughly 16 frames per second, the human eye perceives the picture as continuous. A frame rate of no lower than 16 fps is therefore recommended. When setting the frame rate, weigh the requirements against competing products. Note that below 5 fps, viewers easily notice that the picture is discontinuous and frozen.

On a weak network

At a packet loss rate of 10%, the frame rate must not drop noticeably compared with the normal network.

Testing method

Devices: 2 computers + 1 camera + 2 cell phones

The equipment is used as follows:

- One computer plays the video and the other records it.
- One phone acts as the host and the other as the audience.
- The camera captures the picture on the audience (client) side.
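Suppose the recorded audience-side video has been reduced to the timestamps of frames whose content actually changed, for example by comparing consecutive frames. The frame rate can then be checked against the 16 fps recommendation and the 5 fps freeze threshold.

This is a minimal sketch that assumes such timestamps (in seconds) are already available. How they are extracted from the recording is outside the sketch.

```python
from __future__ import annotations

from collections import Counter

RECOMMENDED_FPS = 16  # recommended minimum frame rate
FREEZE_FPS = 5        # below this, viewers easily notice a frozen picture


def per_second_fps(frame_times_s: list[float]) -> list[int]:
    """Count distinct (changed) frames in each whole second of the recording."""
    counts = Counter(int(t) for t in frame_times_s)
    last_second = int(max(frame_times_s))
    return [counts.get(sec, 0) for sec in range(last_second + 1)]


def summarize(frame_times_s: list[float]) -> dict[str, float]:
    """Average fps plus the number of seconds below each threshold."""
    fps = per_second_fps(frame_times_s)
    return {
        "average_fps": sum(fps) / len(fps),
        "seconds_below_16fps": sum(1 for f in fps if f < RECOMMENDED_FPS),
        "seconds_below_5fps": sum(1 for f in fps if f < FREEZE_FPS),
    }


# Synthetic timestamps: 2 s at 20 fps, then 1 s at 4 fps.
times = [i / 20 for i in range(40)] + [2 + i / 4 for i in range(4)]
print(per_second_fps(times))  # [20, 20, 4]
print(summarize(times))
```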

Influencing factors

On a normal network, the frame rate depends mainly on the video bitrate. The higher the bitrate, the higher the frame rate and resolution the encoder can produce.

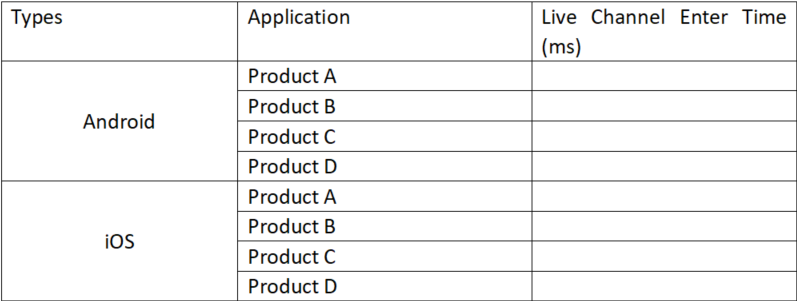

Competing products' data

4. Freeze count

Testing methods

Testing tools for iOS and Android devices can be used.

Influencing factors

On a normal network, the frame rate depends mainly on the video bitrate. The higher the bitrate, the higher the frame rate and resolution the encoder can produce.
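One common way to count freezes is to flag every gap between consecutive rendered frames that exceeds a threshold. The sketch below uses a 200 ms threshold purely as an assumption for illustration. The article does not specify one, and commercial tools use their own definitions.

```python
from __future__ import annotations

FREEZE_GAP_MS = 200  # hypothetical threshold; the source does not define one


def count_freezes(render_times_ms: list[float], gap_ms: float = FREEZE_GAP_MS) -> tuple[int, float]:
    """Return (freeze count, total frozen time in ms) from frame render times."""
    freezes, frozen_ms = 0, 0.0
    for prev, cur in zip(render_times_ms, render_times_ms[1:]):
        gap = cur - prev
        if gap > gap_ms:
            freezes += 1
            frozen_ms += gap
    return freezes, frozen_ms


# Synthetic render timeline: smooth 40 ms spacing with two stalls.
timeline = [0, 40, 80, 400, 440, 480, 1000, 1040]
print(count_freezes(timeline))  # (2, 840.0)
```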

5. Stability of video quality

Over 3 hours of live streaming under all kinds of network impairment, there is no blurring, black screen or unexpected interruption.

Testing method

Run automated network impairment tests and record the stream with VideoStudio.

Check the recorded video for blurring, black screens or abnormal interruptions.

Standards of sound quality

The sound quality standards are as follows.

1. Sampling rate

On a normal network

The audio sampling rate is greater than 16 kHz.

On a weak network

The audio sampling rate is greater than 16 kHz.

Both live video streaming and voice chat scenarios should be covered.

Testing method

Equipment: two phones, an audio playback device, a voice recorder

Use one phone as the host and the other as the audience;

Use the player to play the voice sample (music) on the host side;


Use Adobe Audition to check the spectrum. If the highest frequency content is about 7000 Hz, the sampling rate is about 16 kHz.
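The spectrum check works because of the Nyquist limit. A signal sampled at rate fs can only carry content up to fs/2, so a 16 kHz stream tops out at 8 kHz. Wideband speech codecs typically cut off around 7 kHz, which is why about 7000 Hz points to 16 kHz.

The minimal sketch below maps the highest observed frequency to the smallest common sampling rate that could carry it. The list of rates is an assumption and can be extended. The result is the effective rate: a stream resampled to 48 kHz but containing nothing above 7 kHz still only delivers 16 kHz quality.

```python
COMMON_RATES_HZ = [8000, 16000, 24000, 32000, 44100, 48000]


def min_sampling_rate(highest_freq_hz: float) -> int:
    """Smallest listed sampling rate whose Nyquist limit (rate / 2) covers the content."""
    for rate in COMMON_RATES_HZ:
        if highest_freq_hz <= rate / 2:
            return rate
    raise ValueError("content above 24 kHz exceeds the listed rates")


print(min_sampling_rate(7000))   # 16000
print(min_sampling_rate(3400))   # 8000
print(min_sampling_rate(15000))  # 32000
```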

2. Objective sound quality score

On a normal network

During live streaming on a normal network, the average sound quality score should be ≥ 4.

On a weak network

During live streaming on a weak network, the average sound quality score should be ≥ 3.5.

Testing method

Live streaming mode:

Live streaming has about two seconds of latency. Record the audio through audio cables first, then score the recording with the Spirent device.

Equipment: two audio cables, one computer, two phones

Connect the host-side phone's microphone input to the computer's speaker output, and connect the audience-side phone's speaker output to the computer's microphone input;

Play the sample in a loop on the computer.

Start recording in Adobe Audition, and record for about 2 minutes.

Cut the recording into segments of 10 s each, with 3 s of silence before the speech in each segment.

Upload the segments to the Spirent device and calculate the POLQA score.
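The cutting step can be scripted with Python's standard wave module instead of being done by hand. This minimal sketch splits a recording into consecutive 10-second WAV segments and drops any trailing partial segment.

It assumes the 3 seconds of leading silence are already part of each 10-second unit of the looped test sample. That is an interpretation of the steps above. The file names in the usage comment are hypothetical.

```python
from __future__ import annotations

import io
import wave


def split_wav(data: bytes, segment_seconds: float = 10.0) -> list[bytes]:
    """Split WAV bytes into full-length WAV segments of segment_seconds each."""
    segments = []
    with wave.open(io.BytesIO(data), "rb") as src:
        params = src.getparams()
        frames_per_segment = int(params.framerate * segment_seconds)
        bytes_per_frame = params.nchannels * params.sampwidth
        while True:
            chunk = src.readframes(frames_per_segment)
            # Stop at the end of the file or at a partial trailing segment.
            if len(chunk) < frames_per_segment * bytes_per_frame:
                break
            buf = io.BytesIO()
            with wave.open(buf, "wb") as dst:
                dst.setnchannels(params.nchannels)
                dst.setsampwidth(params.sampwidth)
                dst.setframerate(params.framerate)
                dst.writeframes(chunk)
            segments.append(buf.getvalue())
    return segments


# Usage (hypothetical file names):
# with open("recording.wav", "rb") as f:
#     for i, seg in enumerate(split_wav(f.read())):
#         with open(f"segment_{i:02d}.wav", "wb") as out:
#             out.write(seg)
```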

Voice chat mode

Voice chat latency is below 1 second, so test directly with Spirent:

Start a voice chat between the host and the audience;

Use Spirent to test sound quality in both directions, for about 8 minutes in total;

Calculate the average sound quality score.
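For both modes, the pass/fail decision comes down to two steps:

1. Average the per-segment POLQA scores.
2. Compare the mean with the threshold: 4.0 for a normal network, 3.5 for a weak network.

This is a minimal sketch. The scores in the example are placeholders, not measurements.

```python
from __future__ import annotations

from statistics import mean

MOS_THRESHOLDS = {"normal": 4.0, "weak": 3.5}


def mos_verdict(scores: list[float], network: str) -> tuple[float, bool]:
    """Average per-segment POLQA scores and compare with the network's threshold."""
    avg = mean(scores)
    return avg, avg >= MOS_THRESHOLDS[network]


print(mos_verdict([4.1, 3.9, 4.2, 4.0], "normal"))
```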

3. Audio-video synchronization

On both normal and weak networks, the probability of audio and picture being out of sync is 0.

Testing method

While watching the live broadcast, check whether the host's lip movements match the voice.
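The lip-sync check is subjective. A more objective complement works as follows:

1. Record a sharp audio-visual event, such as a clap, on the audience side.
2. Note the time the event appears in the audio track and in the video track.
3. Compare the offset with a tolerance window.

The window below is an assumption taken from the ITU-R BT.1359 detectability thresholds: audio leading by more than about 45 ms, or lagging by more than about 125 ms, is noticeable. Tune it as needed, since the article itself requires zero perceived desynchronization.

```python
from __future__ import annotations

AUDIO_LEAD_LIMIT_MS = 45   # assumed: audio ahead of video (ITU-R BT.1359 detectability)
AUDIO_LAG_LIMIT_MS = 125   # assumed: audio behind video (same source)


def av_offset_ok(audio_event_ms: float, video_event_ms: float) -> tuple[float, bool]:
    """Return (offset, in_sync). A positive offset means audio leads video."""
    offset = video_event_ms - audio_event_ms
    in_sync = -AUDIO_LAG_LIMIT_MS <= offset <= AUDIO_LEAD_LIMIT_MS
    return offset, in_sync


print(av_offset_ok(audio_event_ms=10_000, video_event_ms=10_030))  # (30, True)
print(av_offset_ok(audio_event_ms=10_200, video_event_ms=10_000))  # (-200, False)
```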

4. Voice chat - noise suppression

During a voice chat between the host and the audience, noise suppression must not deteriorate compared with the previous version.

Testing method

Equipment: one audio cable, one device for playing the voice sample, one computer

Start a voice chat between the host side and the audience side.

Place the host's phone in the anechoic chamber, then use the playback device to play the voice sample in the chamber.

Connect the audience-side phone's speaker output to the computer's microphone input.

Record with Adobe Audition and save the file.

Record the previous version the same way, in the same test environment.

Compare the new and old versions. Select the same speech segment and noise segment in each, and calculate the signal-to-noise ratio.
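The signal-to-noise ratio of the two selected segments can be computed as:

SNR = 10·log10(P_speech / P_noise)

where P is the mean power (mean squared sample value) of each segment. This is a minimal sketch on raw sample lists. It treats the speech segment's power as signal power, which slightly overstates SNR because that segment also contains noise.

```python
import math


def mean_power(samples: list) -> float:
    """Mean squared amplitude of a segment."""
    return sum(x * x for x in samples) / len(samples)


def snr_db(speech: list, noise: list) -> float:
    """SNR in dB between a speech segment and a noise-only segment."""
    return 10 * math.log10(mean_power(speech) / mean_power(noise))


# Toy segments: constant amplitudes 1000 (speech) and 10 (noise).
print(snr_db([1000, -1000] * 50, [10, -10] * 50))  # 40.0
```

Run it on the same segments from both versions. A lower SNR in the new version suggests that noise suppression has regressed.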

5. Voice chat - echo cancellation

Standard

When the host and the audience are in a voice chat, the echo each party hears is slight. This holds whether one person talks or several people talk at the same time, and the echo does not interfere with the conversation.

When only one person talks

Turn on the speaker on the audience side. The host speaks and checks whether they hear an echo of their own voice. Conversely, the audience speaks and listens for an echo of their own voice.

When two people talk at the same time

Both parties turn on their speakers and talk at the same time, then check for echo or intermittent dropouts.

6. Anti-jitter ability

Host side: with uplink jitter of up to 400 ms, video streaming is not affected.

Audience side: with downlink jitter of up to 400 ms, video streaming is not affected.

Testing method

Add 400 ms of uplink jitter on the host side, and check the video and sound on the audience side.

Add 400 ms of downlink jitter on the audience side, and check the video and sound on the audience side.
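To confirm that the impairment tool injects the intended jitter, or to monitor jitter on the receiving side, you can compute the interarrival jitter estimator from RFC 3550 (the RTP specification). It uses packet send and receive timestamps:

J = J + (|D| - J) / 16

where D is the change in transit time between consecutive packets.

This is a minimal sketch that assumes both timestamp lists are in milliseconds. A constant offset between sender and receiver clocks cancels out. Because RFC 3550 jitter is a smoothed mean deviation, it will generally read lower than the peak 400 ms delay variation being injected.

```python
from __future__ import annotations


def rfc3550_jitter(send_ms: list[float], recv_ms: list[float]) -> float:
    """Interarrival jitter estimate as defined in RFC 3550 (section 6.4.1)."""
    jitter = 0.0
    prev_transit = None
    for sent, received in zip(send_ms, recv_ms):
        transit = received - sent  # constant clock offset cancels in the difference below
        if prev_transit is not None:
            d = abs(transit - prev_transit)
            jitter += (d - jitter) / 16  # exponential smoothing with gain 1/16
        prev_transit = transit
    return jitter


# Synthetic packets sent every 20 ms, arriving with alternating 100/110 ms delay.
print(rfc3550_jitter([0, 20, 40, 60], [100, 130, 140, 170]))  # 1.76025390625
```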

7. CPU usage

Host side

CPU usage does not exceed 40% (iOS: iPhone 6; Android: no designated model).

Audience side

CPU usage does not exceed 30% (iOS: iPhone 6; Android: no designated model).

Testing method

iOS

Connect a non-jailbroken phone to a Mac, record CPU usage with Instruments, and then calculate CPU usage with a script.

CPU usage = (main process + mediaserverd + backboardd) / number of cores
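The formula above can be applied to the per-process CPU figures exported from Instruments. This is a minimal sketch with several assumptions:

- The input is a mapping from process name to CPU percentage, where 100% equals one fully used core.
- The key "main" is a hypothetical stand-in for the app under test.
- The example values are not real Instruments output.

```python
from __future__ import annotations

# "main" is a hypothetical key for the app under test.
AV_PROCESSES = ("main", "mediaserverd", "backboardd")


def ios_av_cpu(per_process_pct: dict[str, float], cores: int) -> float:
    """(main process + mediaserverd + backboardd) / number of cores."""
    total = sum(per_process_pct.get(name, 0.0) for name in AV_PROCESSES)
    return total / cores


sample = {"main": 60.0, "mediaserverd": 12.0, "backboardd": 8.0, "SpringBoard": 5.0}
print(ios_av_cpu(sample, cores=2))  # 40.0
```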

Android

Open a command prompt with cmd, then run adb shell top -m 10 > d:\xx.txt to dump the phone's CPU consumption to a file. Use a script to extract the CPU consumption of the video (app) process and of mediaserver. The sum of the two is the CPU consumption of audio and video.
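The article does not give the extraction script. This is a minimal sketch under these assumptions:

- The output uses the older toolbox top layout, with a CPU% column and the process name in the last column. Newer toybox top prints a different header, so the column lookup would need adjusting.
- The package name com.example.live is hypothetical.
- The sample text is illustrative, not real device output.

```python
from __future__ import annotations


def av_cpu_per_snapshot(top_output: str, targets: tuple[str, ...]) -> list[float]:
    """Sum the CPU% of the target processes in each snapshot printed by top."""
    totals: list[float] = []
    cpu_col = None
    for line in top_output.splitlines():
        fields = line.split()
        if "CPU%" in fields:
            # A header row starts a new snapshot.
            cpu_col = fields.index("CPU%")
            totals.append(0.0)
            continue
        if cpu_col is None or len(fields) <= cpu_col:
            continue
        name = fields[-1]
        if any(t in name for t in targets):
            totals[-1] += float(fields[cpu_col].rstrip("%"))
    return totals


sample = """\
User 20%, System 10%, IOW 0%, IRQ 0%
  PID PR CPU% S  #THR     VSS     RSS PCY UID      Name
 4321  1  18% S    45 1650000K 180000K  fg u0_a123  com.example.live
  612  0   6% S    12  60000K  20000K  fg media    /system/bin/mediaserver
  900  2   3% S    30 900000K  50000K  fg system   system_server
User 18%, System 9%, IOW 0%, IRQ 0%
  PID PR CPU% S  #THR     VSS     RSS PCY UID      Name
 4321  1  15% S    45 1650000K 180000K  fg u0_a123  com.example.live
  612  0   6% S    12  60000K  20000K  fg media    /system/bin/mediaserver
"""
print(av_cpu_per_snapshot(sample, ("com.example.live", "mediaserver")))  # [24.0, 21.0]
```

Averaging the per-snapshot totals gives a single figure to compare with the 40% (host) or 30% (audience) limit.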

8. Flow (data traffic)

Compared with the previous version, the amount of audio and video data the audience side receives during live streaming has not increased.

Testing method

iOS

Connect a non-jailbroken phone to a Mac, open Terminal, and enter: rvictl -s <device UDID>.

Switch to superuser (su) and enter: tcpdump -i rvi0 -vv -s 0 -w xx.pcap.

Keep it running for 3 minutes, then open the file in Wireshark and check the traffic.
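Besides checking the totals in Wireshark, the captured traffic can be summed with a short script. This minimal sketch reads the classic libpcap file format that tcpdump -w writes: a 24-byte global header, then a 16-byte record header per packet. It uses the standard struct module.

The sketch has two limits. It does not handle the newer pcapng format. It also counts all captured bytes rather than filtering down to the app's streams.

```python
from __future__ import annotations

import struct

LE_MAGICS = {b"\xd4\xc3\xb2\xa1": 1e6, b"\x4d\x3c\xb2\xa1": 1e9}  # usec / nsec timestamps
BE_MAGICS = {b"\xa1\xb2\xc3\xd4": 1e6, b"\xa1\xb2\x3c\x4d": 1e9}


def pcap_traffic(data: bytes) -> tuple[int, int, float]:
    """Return (packets, bytes on the wire, capture duration in s) for a classic pcap."""
    magic = data[:4]
    if magic in LE_MAGICS:
        endian, ts_scale = "<", LE_MAGICS[magic]
    elif magic in BE_MAGICS:
        endian, ts_scale = ">", BE_MAGICS[magic]
    else:
        raise ValueError("not a classic pcap file (pcapng is not handled here)")
    offset, packets, total = 24, 0, 0  # skip the 24-byte global header
    first_ts = last_ts = 0.0
    while offset + 16 <= len(data):
        ts_sec, ts_frac, incl_len, orig_len = struct.unpack_from(endian + "IIII", data, offset)
        ts = ts_sec + ts_frac / ts_scale
        if packets == 0:
            first_ts = ts
        last_ts = ts
        packets += 1
        total += orig_len  # original length on the wire, even if truncated by snaplen
        offset += 16 + incl_len  # record header + captured bytes
    return packets, total, last_ts - first_ts


# Usage with the capture from the steps above:
# with open("xx.pcap", "rb") as f:
#     packets, total, secs = pcap_traffic(f.read())
# print(f"{total * 8 / secs / 1000:.1f} kbit/s over {secs:.0f} s")
```

Comparing the resulting kbit/s between the new and previous versions gives a number to put against the "has not increased" requirement.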

Android

Install a packet capture tool.

Start capturing in the tool after the app under test starts running.

Open the generated pcap file in Wireshark and check the traffic.

9. Power consumption

Watch the live stream on the audience side for 10 minutes and for 20 minutes, and record the phone's battery consumption.

Test the previous version with the same phone in the same test environment.

Compare the power consumption of the two versions.

10. Heat

Compared with the previous version, the heat generated while watching the live stream has not increased.

Testing method

Watch the live stream on the audience side for 10 minutes and for 20 minutes, then measure and record the temperature of the back of the phone with a thermometer.

Test the previous version with the same phone in the same environment.

Compare the back temperatures of the two versions.
